- Compute-over-data applications enhance processing efficiency by bringing computation tasks directly to the data storage location, thereby reducing data movement.

- They bolster data privacy and security by performing computations locally, minimizing the need to transfer data.

- These applications enable faster processing and improved scalability through the use of decentralized compute and storage resources.

- By minimizing data transfer, compute-over-data applications can make it easier to comply with data protection regulations.

- They are cost-effective, optimizing cloud service utilization and reducing unnecessary data movement.

Compute-over-data applications optimize data processing by performing computations directly where data is located, avoiding unnecessary data movement. This approach improves processing efficiency, strengthens privacy, simplifies compliance with data protection laws, and can reduce costs, particularly when using cloud services.

Key benefits include faster data processing, flexible scaling, and improved data security. These applications are used in sectors like healthcare, mapping, and big data analysis for tasks such as DNA sequencing, 3D modeling, and large-scale data analytics.

Technical components include requester nodes, compute nodes, storage providers, validators, and executors. Together, they point to significant changes in how data is analyzed and how computing resources are managed.


Understanding Compute-Over-Data

Compute-over-data is a new way to handle data and processing. Traditionally, data is moved to a central computer for processing; this method instead brings the computing work to where the data is stored.

This reduces how much data needs to be moved around, which saves time and network resources and makes processing faster and more efficient.
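The difference between the two approaches can be sketched in a few lines of Python. This is a minimal, single-process illustration under stated assumptions: the three storage sites and their values are hypothetical, and the "network" is simulated by counting how many values would have to be transferred. Both strategies compute the same total, but only the compute-over-data version avoids copying every value to one place.

```python
from typing import Callable

# Hypothetical datasets held at three storage sites (illustrative values only).
SITES: dict[str, list[float]] = {
    "site-a": [float(i) for i in range(10_000)],
    "site-b": [float(i) for i in range(20_000)],
    "site-c": [float(i) for i in range(5_000)],
}


def move_data_to_compute(sites: dict[str, list[float]]) -> tuple[float, int]:
    """Traditional approach: copy every value to one central place, then sum."""
    central: list[float] = []
    for values in sites.values():
        central.extend(values)  # every value "crosses the network"
    return sum(central), len(central)


def move_compute_to_data(
    sites: dict[str, list[float]], task: Callable[[list[float]], float]
) -> tuple[float, int]:
    """Compute-over-data: run the task at each site, ship back only results."""
    partials = [task(values) for values in sites.values()]  # runs "locally"
    return sum(partials), len(partials)  # one value per site is transferred


total_a, moved_a = move_data_to_compute(SITES)
total_b, moved_b = move_compute_to_data(SITES, sum)
print(total_a == total_b, moved_a, moved_b)
```

Running it prints `True 35000 3`: the same answer, but 3 values moved instead of 35,000.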

It also helps keep data more secure because it doesn’t travel as much, which means there’s less chance for sensitive information to be exposed.

Compute-over-data also helps make better use of computing resources. Systems can adjust how much computing and storage capacity they use based on what they need at any given time, so capacity is neither left idle nor stretched too thin.

Technologies like Bacalhau and the COD framework show how compute-over-data works in practice. For example, Bacalhau uses a network of computers spread across different locations to process large datasets where they are stored. The COD framework describes how to apply compute-over-data in different situations.

Benefits of Compute-Over-Data Applications

Compute-over-data applications offer several benefits that contribute to improved data privacy, efficiency, cost-effectiveness, and compliance with data protection laws. Here are the key advantages:

- Enhanced Data Privacy: By performing calculations directly where the data is stored, compute-over-data applications help keep sensitive information safe and reduce the risk of data leaks. This is achieved by avoiding the need to transfer large files over the internet, minimizing the exposure of data to potential security threats.

- Improved Efficiency: Compute-over-data applications enable faster data analysis by minimizing the amount of data that needs to be moved around. By performing calculations at the storage location, there is less data transfer overhead, resulting in quicker insights and analysis.

- Cost Savings: Separating computing from data storage allows companies to adjust their computing power based on their needs without incurring excessive costs. This flexibility enables businesses to utilize cloud services or their own equipment more efficiently, optimizing resource allocation and reducing unnecessary expenses.

- Compliance with Data Protection Laws: Compute-over-data applications can support compliance with data protection laws such as the General Data Protection Regulation (GDPR) in Europe and the Health Insurance Portability and Accountability Act (HIPAA) in the United States. By keeping data in its original location during calculations, they make it easier to meet regulations that require strict control over how data is handled and shared, although they do not guarantee compliance on their own.
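A rough calculation shows why avoiding data movement matters for efficiency. The sketch below assumes an idealized link that transfers data at its full rated speed with no protocol overhead or latency; the 1 TB dataset, 1 Gbps link, and 1 MB result size are illustrative numbers, not measurements.

```python
def transfer_seconds(num_bytes: float, link_bits_per_second: float) -> float:
    """Time to push num_bytes over an idealized link (no overhead, no latency)."""
    return num_bytes * 8 / link_bits_per_second


ONE_TB = 1e12   # decimal terabyte, in bytes (illustrative dataset size)
ONE_GBPS = 1e9  # 1 gigabit per second (assumed link speed)

# Moving a 1 TB dataset to a central cluster over a 1 Gbps link.
dataset_time = transfer_seconds(ONE_TB, ONE_GBPS)

# Returning only a 1 MB summary computed where the data is stored.
summary_time = transfer_seconds(1e6, ONE_GBPS)

print(f"dataset: {dataset_time:.0f} s (~{dataset_time / 3600:.1f} h)")
print(f"summary: {summary_time * 1000:.0f} ms")
```

Under these assumptions, moving the dataset takes about 8,000 seconds (roughly 2.2 hours), while returning the summary takes about 8 milliseconds.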

In summary, compute-over-data applications offer benefits such as enhanced data privacy, improved efficiency, cost savings, and compliance with data protection laws.

| Benefit | Description | Outcome |
|---|---|---|
| Better Privacy | Calculations happen without moving data | Lower risk of data leaks |
| Faster Processing | Less data needs to be moved | Quicker data processing |
| Flexible Scaling | Computing power can be changed based on need | Cost-effective and adaptable |

For example, a hospital using compute-over-data applications can analyze patient records directly within its own secure systems, helping protect privacy and meet health data regulations. A tech company can use cloud services to run large calculations without building expensive data centers, saving money and scaling up or down as needed.
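The hospital example can be sketched as a simple aggregation in which each site computes a summary inside its own systems and only that summary leaves the site. The `PatientRecord` type, the sample ages, and the two hospitals are hypothetical, and a real system would also need access controls and safeguards against re-identifying patients from small groups.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PatientRecord:
    """Hypothetical record type; real records would hold far more fields."""
    patient_id: str
    age: int


def local_summary(records: list[PatientRecord]) -> tuple[int, int]:
    """Runs inside the hospital: returns (count, sum of ages), no identifiers."""
    return len(records), sum(r.age for r in records)


def combine(summaries: list[tuple[int, int]]) -> float:
    """Runs at the requester: merges per-site summaries into an overall mean."""
    total_count = sum(count for count, _ in summaries)
    total_age = sum(age_sum for _, age_sum in summaries)
    return total_age / total_count


# Hypothetical patient data held by two hospitals.
hospital_a = [PatientRecord("a1", 34), PatientRecord("a2", 58)]
hospital_b = [PatientRecord("b1", 71), PatientRecord("b2", 45), PatientRecord("b3", 62)]

summaries = [local_summary(hospital_a), local_summary(hospital_b)]
print(summaries)  # only counts and sums leave each hospital
print(combine(summaries))
```

Each site returns `(count, sum)` rather than its own average so the requester can compute an exact overall mean (54.0 here); averaging the two site averages would give a different, incorrect result because the sites hold different numbers of patients.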

Real-World Applications

Innovative uses of compute-over-data technologies are changing fields like mapping, healthcare, and big data analysis.

Let’s see some examples:

- In mapping, these technologies help create digital copies of real-world places and manage natural disasters. For example, by processing data from laser scans (called LiDAR) and combining it with other information, these tools can make detailed 3D models. These models help experts understand and analyze geographic areas more precisely.

- In healthcare, compute-over-data systems allow large amounts of data to be processed safely and cost-effectively. This is important for tasks like DNA sequencing, medical imaging (such as X-rays or MRIs), and creating personalized treatment plans. These systems help keep patient data private while still delivering results quickly.

- For big data analysis, compute-over-data methods improve how data is processed. Instead of moving data to where the computing happens, these methods run computing tasks right where the data is stored. This reduces the workload of managing data and boosts processing speed.
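For the mapping case, the same pattern applies to LiDAR data: the node that stores a tile of raw points can reduce it to a much smaller summary before anything is sent back. The sketch below builds a coarse grid of maximum heights (a simple surface model); the points, the 2-metre cell size, and the in-memory tuples are hypothetical stand-ins for real point-cloud files.

```python
from collections import defaultdict

# Hypothetical point representation: (x, y, z) in metres.
Point = tuple[float, float, float]


def max_height_grid(points: list[Point], cell_size: float) -> dict[tuple[int, int], float]:
    """Bucket points into square cells and keep the highest z in each cell."""
    grid: dict[tuple[int, int], float] = defaultdict(lambda: float("-inf"))
    for x, y, z in points:
        cell = (int(x // cell_size), int(y // cell_size))
        grid[cell] = max(grid[cell], z)
    return dict(grid)


# A tiny hypothetical tile: several raw points collapse into a few cells.
tile = [
    (0.5, 0.5, 12.0), (1.2, 0.3, 15.5), (0.9, 1.8, 11.0),  # cell (0, 0)
    (2.5, 0.4, 30.2), (3.9, 1.1, 28.7),                    # cell (1, 0)
    (0.2, 2.6, 5.0),                                       # cell (0, 1)
]
summary = max_height_grid(tile, cell_size=2.0)
print(summary)  # only this grid, not the raw points, is sent back
```

Six raw points become three grid cells; with real tiles, the same reduction means only a small grid, not the raw points, needs to travel.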

Core Parts of Compute-Over-Data Frameworks

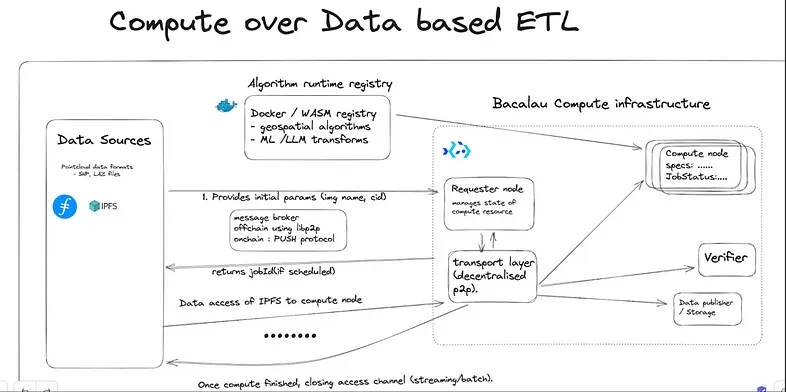

Compute-over-data frameworks have several key parts that make them work. These parts include Requester Nodes, Compute Nodes, Validators, Executors, and Storage Providers.

Let’s break down what each part does using simple language and examples.

- Requester Nodes are like the directors of a movie. They start the compute jobs and specify which datasets are needed. For example, if you want to analyze weather patterns, the Requester Node would request the weather data. They make sure each job is sent to Compute Nodes that can access the right data.

- Compute Nodes do the heavy lifting. They are like the actors in the movie who perform the tasks. These nodes get the data from Storage Providers and carry out the computation tasks. For instance, if the task is to find the average temperature from a set of data, the Compute Node will do the math.

- Storage Providers, such as IPFS (InterPlanetary File System) and Filecoin, are like libraries. They store large amounts of data safely and make it easy to access.

- Validators are like quality checkers. They make sure that the Compute Nodes have done their work correctly. For example, if a Compute Node says the average temperature is 75 degrees, Validators will check if this number is right. This adds trust and reliability to the whole process.

- Executors are like project managers. They handle the execution of tasks that have been verified. For instance, if a task needs to be completed in a certain time, Executors make sure it happens as planned.
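The roles above can be tied together in a toy, single-process model. This is a sketch under loud assumptions, not the API of Bacalhau or any other framework: a SHA-256 hash of the stored bytes stands in for a content identifier, the Validator checks a result by re-running the job on a second node that holds the same data, and the Executor simply runs a task and enforces a time budget. How responsibilities are split between these roles varies from one framework to another.

```python
import hashlib
import json
import time
from statistics import mean
from typing import Callable

# A task maps a list of numbers to a single result (e.g. an average).
Task = Callable[[list[float]], float]


class StorageProvider:
    """Hypothetical content-addressed store: data is looked up by a hash of its bytes.
    The SHA-256 hex digest here is a stand-in, not a real IPFS CID."""

    def __init__(self) -> None:
        self._blobs: dict[str, bytes] = {}

    def put(self, values: list[float]) -> str:
        blob = json.dumps(values).encode()
        cid = hashlib.sha256(blob).hexdigest()
        self._blobs[cid] = blob
        return cid

    def get(self, cid: str) -> list[float]:
        return json.loads(self._blobs[cid])


class Executor:
    """Hypothetical executor: runs a task and fails if it exceeds its time budget."""

    def run(self, task: Task, data: list[float], budget_s: float) -> float:
        start = time.monotonic()
        result = task(data)
        if time.monotonic() - start > budget_s:
            raise TimeoutError("task exceeded its time budget")
        return result


class ComputeNode:
    """Does the work, reading inputs from the storage provider."""

    def __init__(self, name: str, storage: StorageProvider, local_cids: set[str]) -> None:
        self.name, self.storage, self.local_cids = name, storage, local_cids
        self.executor = Executor()

    def compute(self, cid: str, task: Task) -> float:
        return self.executor.run(task, self.storage.get(cid), budget_s=5.0)


class Validator:
    """Checks a claimed result by re-running the job on an independent node."""

    def check(self, node: ComputeNode, cid: str, task: Task, claimed: float) -> bool:
        return abs(node.compute(cid, task) - claimed) < 1e-9


class RequesterNode:
    """Starts jobs and routes each one to nodes that already hold the data."""

    def __init__(self, nodes: list[ComputeNode], validator: Validator) -> None:
        self.nodes, self.validator = nodes, validator

    def submit(self, cid: str, task: Task) -> float:
        holders = [n for n in self.nodes if cid in n.local_cids]
        if len(holders) < 2:
            raise RuntimeError("need two nodes holding the data to validate")
        worker, checker = holders[0], holders[1]
        result = worker.compute(cid, task)
        if not self.validator.check(checker, cid, task, result):
            raise ValueError(f"result from {worker.name} failed validation")
        return result


storage = StorageProvider()
cid = storage.put([72.0, 75.5, 77.0, 75.5])  # hypothetical temperatures (degrees F)
nodes = [
    ComputeNode("node-1", storage, {cid}),
    ComputeNode("node-2", storage, set()),  # does not hold the data
    ComputeNode("node-3", storage, {cid}),
]
requester = RequesterNode(nodes, Validator())
print(requester.submit(cid, mean))
```

Running the job averages four hypothetical temperature readings and prints `75.0` after a second node independently reproduces the result. `node-2` is never chosen because it does not hold the data.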

Future Implications

Building on the basic ideas of compute-over-data frameworks, the future of this design looks promising for data analysis and resource management.

This way of separating computing tasks from data storage allows organizations to quickly adjust and use resources more efficiently, based on what is needed at the moment.

Open data standards are very important because they let data be read and used by many different tools, not just the system that created it. This makes it easier for different cloud services to work together.

With open standards, data can be shared and used across different platforms and applications. This helps drive new ideas and teamwork.

The move towards separating computing from data has several key benefits:

- Better Efficiency: By keeping computing tasks separate from where data is stored, organizations can handle tasks more smoothly. This can reduce delays and speed up processing times. For example, a company analyzing large amounts of sales data can do so faster if they can quickly allocate computing resources as needed.

- Lower Costs: Using resources more wisely means spending less money. If a business only uses the computing power it needs, it can save on operational costs. For instance, an online retailer can save money by scaling up computing resources during high-traffic times and scaling down when traffic is low.

- Improved Resource Use: This design ensures that resources are not wasted. Instead of having computing power sit idle, it can be put to use where it’s needed most. For example, a research lab can use its computing resources for different projects as they arise, rather than having them tied to one static task.
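The scaling behaviour described in these examples can be expressed as a simple rule: run enough compute nodes to cover the pending work, within fixed lower and upper bounds. The capacity of 10 jobs per node and the bounds of 2 and 50 nodes are hypothetical settings; production autoscalers typically add smoothing so the pool does not grow and shrink too rapidly.

```python
import math


def desired_nodes(pending_jobs: int, jobs_per_node: int, min_nodes: int, max_nodes: int) -> int:
    """Return how many compute nodes to run for the current queue length."""
    needed = math.ceil(pending_jobs / jobs_per_node)
    # Clamp so the pool never drops below its minimum or exceeds its cap.
    return max(min_nodes, min(max_nodes, needed))


# Quiet period, normal load, and a traffic spike for a hypothetical retailer.
for pending in (0, 45, 1_000):
    print(pending, "->", desired_nodes(pending, jobs_per_node=10, min_nodes=2, max_nodes=50))
```

With these settings the loop prints `0 -> 2`, `45 -> 5`, and `1000 -> 50`: the pool keeps its minimum during quiet periods and is capped during spikes.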

Frequently Asked Questions

How Does Compute-Over-Data Improve Data Security?

Compute-over-data improves data security by keeping sensitive data in its original location. This reduces transfer risks, minimizes exposure to unauthorized parties, limits how often data must be encrypted for transit and decrypted elsewhere, and decreases the need for data replication, all of which help prevent potential leaks.

What Industries Benefit the Most From Compute-Over-Data Applications?

Industries benefiting the most from compute-over-data applications include healthcare (personalized medicine, disease prediction), finance (fraud detection, risk assessment), retail (customer segmentation, demand forecasting), and manufacturing (predictive maintenance, quality control).

Are There Any Specific Programming Languages Best Suited for Compute-Over-Data?

Python, R, and SQL are highly suited for compute-over-data applications. Python excels with libraries like Pandas and NumPy, R is best for statistical analysis, and SQL is indispensable for database querying and manipulation.
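SQL also shows the compute-over-data principle on a small scale: asking the database to aggregate returns a few rows instead of the whole table. The sketch uses Python's built-in `sqlite3` module with an in-memory table of hypothetical sales figures; the same query pattern applies to larger database engines.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE sales (region TEXT, amount REAL)")
conn.executemany(
    "INSERT INTO sales VALUES (?, ?)",
    # Hypothetical sales rows.
    [("north", 120.0), ("south", 80.0), ("north", 200.0), ("east", 50.0), ("south", 40.0)],
)

# Data-to-compute: fetch every row into Python, then aggregate here.
all_rows = conn.execute("SELECT region, amount FROM sales").fetchall()
pulled: dict[str, float] = {}
for region, amount in all_rows:
    pulled[region] = pulled.get(region, 0.0) + amount

# Compute-to-data: the database aggregates and returns one row per region.
pushed = dict(
    conn.execute("SELECT region, SUM(amount) FROM sales GROUP BY region").fetchall()
)

print(len(all_rows), "rows pulled vs", len(pushed), "rows returned")
print(pulled == pushed)
```

It prints `5 rows pulled vs 3 rows returned` and `True`: both approaches give the same totals, but the second lets the database do the work where the data is stored.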

How Does Compute-Over-Data Compare With Traditional Data Processing Methods?

Compute-over-data reduces latency and costs by processing data where it resides, unlike traditional methods that transfer data to compute resources. This approach improves security by reducing the chance of data being exposed in transit, and it is more efficient for large-scale datasets, such as those used in geospatial analysis.

What Are the Potential Challenges in Implementing Compute-Over-Data Applications?

Implementing compute-over-data applications poses challenges such as ensuring data security, maintaining privacy, optimizing data locality, balancing performance with data transfer speeds, complying with regulations, preserving data integrity, and efficiently managing resource allocation and data distribution.
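Balancing performance against data transfer often comes down to a placement decision: run the job on a busy node that already holds the data, or on an idle node that must fetch it first. The cost model below (queue wait plus transfer time) and every number in it are hypothetical; real schedulers weigh many more signals.

```python
from dataclasses import dataclass


@dataclass
class Node:
    """Hypothetical view of a compute node used for placement decisions."""
    name: str
    has_data: bool         # is the input dataset already stored here?
    queued_seconds: float  # estimated wait before the job could start
    link_gbps: float       # bandwidth for fetching data it does not hold


def placement_cost(node: Node, dataset_gb: float) -> float:
    """Estimated seconds before the job can run: queue wait plus transfer time."""
    transfer = 0.0 if node.has_data else dataset_gb * 8 / node.link_gbps
    return node.queued_seconds + transfer


def choose_node(nodes: list[Node], dataset_gb: float) -> Node:
    """Pick the node with the lowest estimated start delay."""
    return min(nodes, key=lambda n: placement_cost(n, dataset_gb))


nodes = [
    Node("local-busy", has_data=True, queued_seconds=600.0, link_gbps=10.0),
    Node("remote-idle", has_data=False, queued_seconds=0.0, link_gbps=10.0),
]
print(choose_node(nodes, dataset_gb=50).name)    # small input: moving data is cheaper
print(choose_node(nodes, dataset_gb=5000).name)  # large input: wait for data locality
```

For a 50 GB input, fetching the data over a 10 Gbps link takes about 40 seconds, which beats a 600-second queue, so the idle remote node wins; for a 5,000 GB input the transfer would take about 4,000 seconds, so waiting for the node that holds the data is cheaper.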

Conclusion

Compute-over-data applications enable computation to occur directly at the location of the data, offering benefits like reduced latency, enhanced security, and better resource utilization. This approach is crucial in cloud and edge computing, as well as data-intensive research.

Essential technical components include data locality, efficient algorithms, and robust infrastructure. Future advances in AI, machine learning, and real-time analytics are likely to improve computational efficiency further.

To leverage these benefits, consider integrating compute-over-data strategies into your data processing workflows today.
